At Salesforce, we are guided by our five core values: trust, customer success, innovation, equality, and sustainability. We are also deeply committed to the responsible development and deployment of our technology, driven by our Office of Ethical and Humane Use.

Artificial intelligence (AI) is one of the emerging technologies with great potential to improve the state of the world, because it augments human intelligence, amplifies human capabilities, and provides actionable insights that drive better outcomes for our employees, customers, and partners.

We believe that the benefits of AI should be accessible to everyone. But it is not enough to deliver only the technological capabilities of AI; we have an important responsibility to ensure that AI is safe and inclusive for all. We take that responsibility seriously and are committed to providing our employees, customers, and partners with the tools they need to develop and use AI safely, accurately, and ethically.

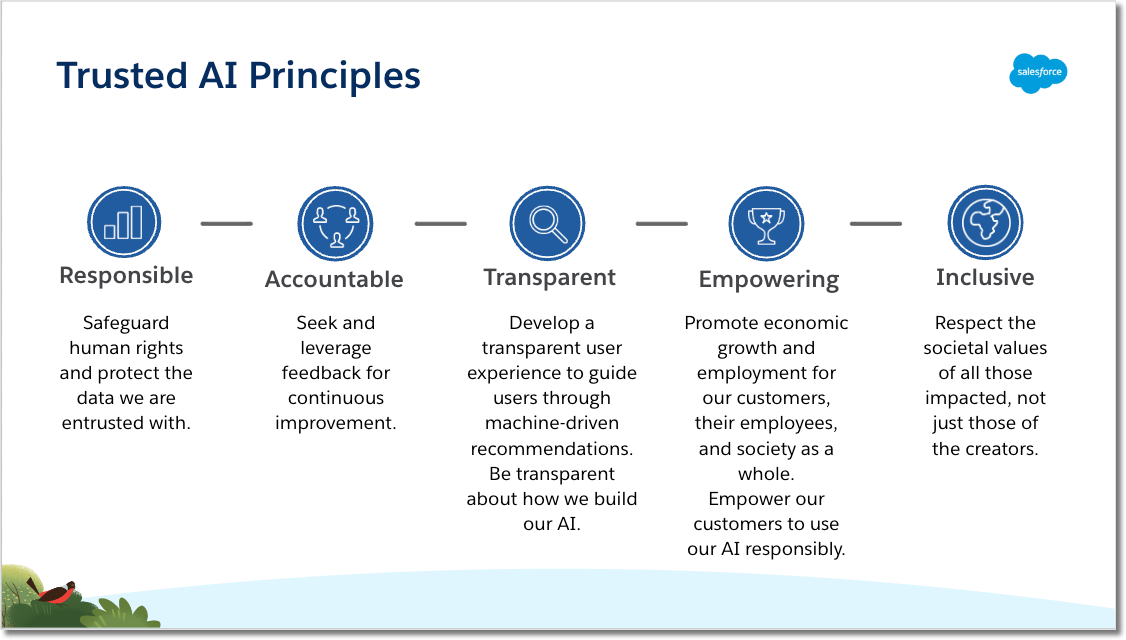

Our Principles

In 2018, we began articulating our trusted AI principles and wanted to ensure that they were specific to Salesforce’s products, use cases, and customers. It was a year-long journey of soliciting feedback from individual contributors, managers, and executives in every organisation across the company, including engineering, product development, UX, data science, legal, equality, government affairs, and marketing. Executives across clouds and roles, including our then co-CEOs Marc Benioff and Keith Block, approved the principles. As we discuss in our Ethical AI Maturity Model, though that journey may feel long, it is an important formative experience in ensuring that everyone in the company has contributed, understands their responsibility for living those principles, and has bought into implementing them in their daily work.

Responsible

Accountable

Transparent

Empowering

Inclusive

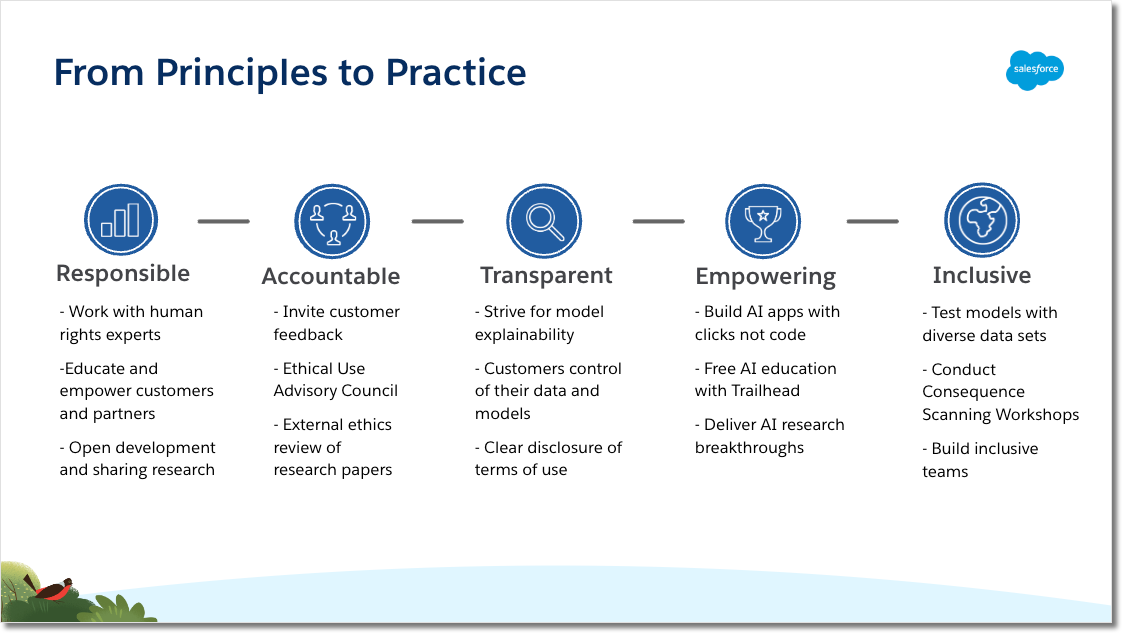

From Principles to Practice

It’s not enough to have a set of principles. Ethics is a team sport, and for these principles to be meaningful, everyone in the company must understand their responsibility for living them. Below are examples of how we have translated these principles into practice.

Responsible

Before building any AI feature, we ask not only whether we can do something, but also whether we should. We work with external human rights experts to continually learn, grow, and discover new ways to protect human rights.

We educate and empower our customers to make informed decisions about how to use our AI responsibly. We do this by creating tools and resources that help Salesforce employees, customers, and partners identify and mitigate bias in the systems they build and use (e.g., flagging when protected data categories and proxy variables are used in a model, and providing transparency into the factors that most influence individual predictions). These tools and resources also help customers and partners understand their responsibility to adopt AI in a safe and reliable way.

We adhere to the highest security and privacy practices to help anticipate and mitigate unintended harm and keep our products safe. We comply with applicable laws governing AI research and use. We also strive to meet the highest scientific and quality standards in our research, ensuring its safety and sharing it through peer-reviewed publications, conferences, and industry events.

Accountable

We engage with external human rights and technology ethics experts through our Ethical Use Advisory Council and workshops. We also invite feedback from our customers through Customer Advisory Boards and open dialog and incorporate it into our deliberation process.

We believe in the importance of giving back to our industry and society by collaborating with our peers through industry groups, civil society forums, and governmental organisations (e.g., US National Institute of Standards and Technology, US National AI Advisory Committee, Singapore's Advisory Council on the Ethical Use of AI and Data) to continuously improve our practices and policies.

We enable employees to raise questions and concerns through channels such as our anonymous online corporate reporting and governance system, Slack channels, and a group email address.

Transparent

Transparency covers not only how we build our models but also why they make the predictions or recommendations they do. We publish model cards that describe how models were created, intended and unintended use cases, known ethical or societal implications, and performance scores. We also provide model explainability when an AI prediction or recommendation is made.

We enable customers to remain in control of their data and models at all times. The data we manage does not belong to Salesforce—it belongs to the customer. We also provide customers with a clear disclosure of terms of use and the intended applications of Salesforce’s AI capability.

Empowering

We strive to abstract away the complexity of AI so that people of all technical skill levels, not only advanced data scientists, can build AI applications with just clicks, not code. This includes tools for measuring disparate impact (one definition of bias) and for automatically populating model cards (like nutrition labels for models), as well as in-app guidance so customers know how to use our AI responsibly. Additionally, we create and deliver free AI education via Trailhead to enable anyone to gain the skills needed for the jobs of the Fourth Industrial Revolution.

The Salesforce AI team is committed to delivering AI research breakthroughs to inform new product categories and ensure that our customers stay at the forefront of technological advancements.

Inclusive

We are all one. We are in this together.

At Salesforce, we believe the ethical use of advanced technology such as AI is an increasingly complex issue. It must be clearly addressed, not only by us but by our entire industry. We welcome a multi-stakeholder dialog that includes our employees, customers, partners, and communities.

By coming together to solve emerging challenges and ensure that these new advances take diverse experiences into account, we can drive positive change with the power of AI, and guide the development of AI with the perspectives that help us make the most of human potential.